History of Dosing

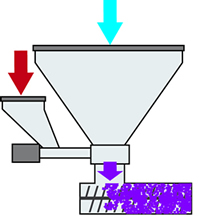

Early dosing (feeding) devices consisted of a metering device mounted in a framework above the machine throat, which allowed virgin and virgin/regrind materials to flow through the framework while dosing occurred. Although simple, these primitive versions were often inaccurate because they were difficult to calibrate and clean. Metering was also inconsistent because the metering components themselves varied widely: augers were unrefined, and the DC motors that drove them drifted erratically to a stop at the conclusion of metering.

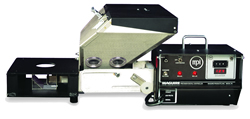

Later refinements to at-the-throat dosing devices introduced significantly improved calibration designs, which made calibration more reliable and accurate, along with improved augers and auger housings, which tightened metering accuracy. In addition, newer DC motors provided greater speed control and minimal drift once de-energized.

The dosers were also made smaller, so a more compact unit could be installed without sacrificing additive capacity and could be cleaned more easily, often by complete removal of the storage hopper. The throat adaptor was now an important part of the mechanism. It provided a void in the main material flow where metered material could be introduced, permitted more than one dosing unit to be used, and allowed these units to be installed or removed easily. Manufacturers often designed these units with the auger metering “uphill” so that dribbles at the end of the auger were minimized, controlling undesirable overdosing.

The common plagues of these dosing units continued, however:

- Calibration

- The accuracy limitations of volumetric dosing.

Common to both was the auger, whose flights determined the “volume” of material transported from the supply hopper to the machine throat. At best, the dosing device assumed a fixed relationship between the metered volume of the additive and its actual weight, so it had to be calibrated to establish that relationship. The calibration process was typically a clumsy afterthought for the average processing worker. The dosing level often ended up wrong or, at the very least, was overdosed “to be sure” that a minimum of additive was introduced under any conditions. The result was high cost from overdosing and, frequently, bad parts. If the additive flow was altered in any way (decreasing volume of additive in the supply hopper, bridging, changes in density, erratic DC motor operation, etc.), the dosing device had no way of detecting the change and continued metering at the pace selected during calibration. Once again, bad parts and overdosing were often the results.
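The volume-to-weight assumption behind volumetric calibration can be illustrated with a small sketch. The function names and all numbers below are hypothetical and chosen only for illustration; they do not describe any particular doser. The sketch assumes the simplest possible calibration, a single weighed sample collected over a known number of auger revolutions.

```python
def calibrate(sample_weight_g: float, revolutions: float) -> float:
    """Return grams delivered per auger revolution from one weighed sample."""
    if revolutions <= 0:
        raise ValueError("revolutions must be positive")
    return sample_weight_g / revolutions


def revolutions_for_dose(target_g: float, grams_per_rev: float) -> float:
    """Auger revolutions needed for target_g, assuming the calibration still holds."""
    return target_g / grams_per_rev


def dosing_error_pct(calibrated_g_per_rev: float, actual_g_per_rev: float) -> float:
    """Percent overdose (+) or underdose (-) once the real delivery rate has drifted."""
    return (actual_g_per_rev - calibrated_g_per_rev) / calibrated_g_per_rev * 100.0


# Hypothetical calibration: 50 revolutions delivered a 25 g sample.
g_per_rev = calibrate(25.0, 50)                # 0.5 g per revolution
revs = revolutions_for_dose(2.0, g_per_rev)    # 4.0 revolutions for a 2 g dose

# Suppose bulk density later falls so the auger really delivers 0.45 g/rev.
# The volumetric doser cannot see this and keeps turning 4 revolutions,
# so every dose is about 10% light.
error = dosing_error_pct(g_per_rev, 0.45)
```

Because nothing in this open-loop arrangement measures what was actually delivered, the only defense available to the operator is to raise the setpoint, which is the “to be sure” overdosing described above.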

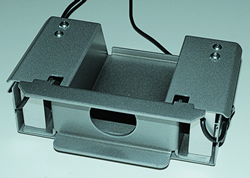

Changes in the science of dosing soon eliminated both common headaches of at-the-throat metering with the introduction of gravimetric dosing. By actively measuring the loss in weight of the supply hopper, the doser could closely monitor each turn of the metering auger and adjust it to ensure that exactly the right weight of additive was introduced with every shot (injection) or every second (extrusion).
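The loss-in-weight idea can be sketched as a simple feedback loop. This is a minimal illustration under stated assumptions, not how any specific controller works: the hopper weight is assumed to be read without noise before and after each shot, the correction is a plain per-shot rescaling, and the names `next_revolutions` and `simulate` are hypothetical. Real controllers typically filter load cell readings and handle hopper refills, which are omitted here.

```python
def next_revolutions(target_g, weight_before_g, weight_after_g, last_revs):
    """Rescale auger revolutions for the next shot from the measured loss in weight."""
    delivered_g = weight_before_g - weight_after_g
    if delivered_g <= 0 or last_revs <= 0:
        return last_revs  # no usable measurement; keep the previous setting
    measured_g_per_rev = delivered_g / last_revs
    return target_g / measured_g_per_rev


def simulate(target_g, true_g_per_rev_by_shot, start_revs, hopper_g=1000.0):
    """Return the dose delivered on each shot as the loop corrects itself."""
    revs = start_revs
    doses = []
    for true_rate in true_g_per_rev_by_shot:
        before = hopper_g
        hopper_g -= revs * true_rate          # material leaves the hopper
        doses.append(before - hopper_g)       # what the load cell would report
        revs = next_revolutions(target_g, before, hopper_g, revs)
    return doses


# Hypothetical run: target 2 g per shot, delivery rate drops from
# 0.5 to 0.45 g/rev on the third shot (for example, a density change).
doses = simulate(2.0, [0.5, 0.5, 0.45, 0.45], start_revs=4.0)
# Shot 3 comes out light (about 1.8 g); shot 4 is back on target
# because the measured loss in weight rescaled the auger revolutions.
```

In this sketch the same disturbance that left the volumetric doser permanently off target is corrected after a single shot, and no separate calibration step is needed because every shot doubles as a measurement.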

Calibration, long a nuisance, was no longer necessary because weight measurements were taken continuously as part of normal operation. This single change, coupled with the other refinements made to dosing devices, has once again made them a viable alternative for processors who wish to keep their equipment simple and their investment low.

In addition, recent improvements in technology and controls have made gravimetric devices, which are typically more costly, more attainable for the average processor. A prudent molder no longer needs to choose between low cost and precision.