With AI: Trust But Verify

A growing number of people view AI not as “artificial intelligence,” wholly supplanting the human with computing power, but rather as “augmented intelligence,” working alongside a person’s mind to better it, not replace it.

Much like the automation of physical labor via robots and machinery, the automation of mental labor via computing power is prompting anxiety among some, oftentimes understandably, and overexuberance among others, who try to shove AI and machine learning into areas they’re not ready to occupy.

During the recent IME West event in Anaheim, organized by Informa, Pat Baird, head of global software standards at Philips, discussed regulatory standards for AI in healthcare. Within this space, AI applications range from something as simple as virtual voice attendants on hospital phone systems to programs that can diagnose disease without human assistance.

To frame the discussion, and in some ways the inevitability of where things are headed, Baird cited a paper published in the medical journal The Lancet in 2019 by Antonio Di Ieva. Di Ieva discussed an alternate view of AI that would “shift the paradigm from one of human-versus-machine, to human-and-machine,” writing that, “machines will not replace physicians, but physicians using AI will soon replace those not using it.”

Baird then discussed an autonomous AI-based diagnostic system used to detect diabetic retinopathy. Built on AI-powered algorithms that detect the condition in images of a retina, the technology recently deployed machine learning to boost accuracy. In April 2018, FDA permitted marketing of the technology. In a release, the agency said:

“IDx-DR is the first device authorized for marketing that provides a screening decision without the need for a clinician to also interpret the image or results, which makes it usable by health care providers who may not normally be involved in eye care.”

From an instance where human intervention isn’t required, Baird turned to instances where complete trust in AI systems led people astray. First, during California’s wildfires in 2017, local police had to ask drivers to use common sense rather than traffic apps to direct their commutes after the programs pointed users toward areas engulfed in flames because those roadways had no traffic at the time.

Next up, a 35-mph speed limit sign, only slightly modified by a small piece of tape, led autonomous driving software to read it as 85 mph. A person would think twice about such a speed limit in what’s likely a residential area, but the algorithm did not.

Finally, Baird offered up the example of autonomous vehicles stopping at yellow lights. While that follows the spirit of the law and is safer than gunning it through such intersections, it overlooks one thing: tailgaters. Where a person would likely glance at the rearview mirror to make sure the driver behind was equally committed to waiting for the light to change, AI wouldn’t make such a concession.

These examples brought Baird to a discussion of levels of autonomy. Using vehicles as an example, with six levels defined, Level 0 is no automation with complete manual human control, while at Level 5 no human interaction or attention is needed and the vehicle is fully autonomous. In between are systems ranging from something as simple as cruise control up to the automation of steering and acceleration, with human interaction or override possible in all but the final level.
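For readers who think in code, the six-level scale can be sketched as a small Python enumeration. This is only an illustration: the names of the intermediate levels are borrowed from the SAE J3016 driving-automation standard and were not given in the talk, and the `human_override_possible` helper is a hypothetical name that simply encodes the statement above that override is possible in all but the final level.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Six levels of driving automation, from fully manual to fully autonomous.

    Level names 1 through 4 follow SAE J3016 and are an assumption here;
    the article itself only describes Level 0 and Level 5.
    """
    NO_AUTOMATION = 0           # complete manual human control
    DRIVER_ASSISTANCE = 1       # e.g. cruise control
    PARTIAL_AUTOMATION = 2      # steering and acceleration automated
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5         # no human interaction or attention needed


def human_override_possible(level: AutonomyLevel) -> bool:
    """Per the article, a human can intervene at every level except the last."""
    return level < AutonomyLevel.FULL_AUTOMATION


if __name__ == "__main__":
    for level in AutonomyLevel:
        print(level.value, level.name, human_override_possible(level))
```

Framing the scale this way makes one design question explicit: for any given system, someone has to decide which level it sits at and whether an operator can still step in.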

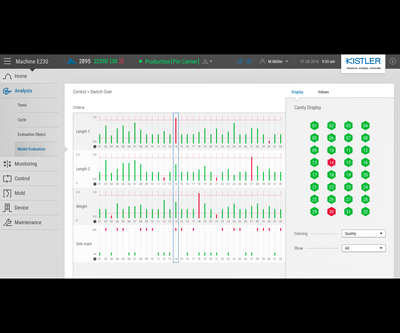

Within the injection molding space, multiple machine suppliers offer intelligent systems that can shift process parameters on the fly in response to changes in ambient plant conditions or in the material that affect part filling. In terms of levels of autonomy, these sit closer to the cruise-control end of the autonomous-vehicle scale than to the no-human-interaction-required end, but it’s logical to expect that, in the foreseeable future, the operator could be removed from the equation.

Before that time comes, we need to think about those “yellow light” scenarios where we intrinsically understand a human’s thought process and intervention but an algorithm might not. “The challenge is that we forget areas that are second nature to us,” Baird said. We’d best remember those areas as we design AI systems for the future.
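To make the “yellow light” point concrete, here is a minimal Python sketch of a stopping rule that includes the rearview check a human driver makes without thinking. Everything in it is hypothetical: the function name, its parameters, and the two-second following-gap threshold are assumptions chosen for illustration, not how any actual autonomous driving system works.

```python
from typing import Optional


def should_stop_at_yellow(
    can_stop_before_line: bool,
    follower_gap_s: Optional[float],
    min_safe_gap_s: float = 2.0,  # assumed threshold, for illustration only
) -> bool:
    """Decide whether to brake for a yellow light.

    can_stop_before_line: True if the vehicle can stop comfortably in time.
    follower_gap_s: time gap to the vehicle behind, in seconds, or None
        if no vehicle is following.
    """
    if not can_stop_before_line:
        # Too close to the intersection to stop comfortably.
        return False
    if follower_gap_s is not None and follower_gap_s < min_safe_gap_s:
        # The "second nature" check: a tailgater makes braking risky.
        return False
    return True
```

A rule that looks only at the light and the stopping distance would omit the second check entirely, which is exactly the kind of unstated human judgment the talk warns about.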

Can artificial intelligence augment human intelligence?

Related Content

Drones and Injection Molding Ready for Takeoff

Drones and unmanned aerial vehicles (UAV) are approaching an inflection point where their production volumes — and functionality — will increasingly point to injection molding.

Read MoreNew Technology Enables ‘Smart Drying’ Based on Resin Moisture

The ‘DryerGenie’ marries drying technology and input moisture measurement with a goal to putting an end to drying based on time.

Read MoreFive Ways to Increase Productivity for Injection Molders

Faster setups, automation tools and proper training and support can go a long way.

Read More'Smart,' Moisture-Based Drying Technology Enhanced

At NPE2024, Novatec relaunches DryerGenie with a goal to putting an end to drying based on time.

Read MoreRead Next

Injection Molding: Artificial Intelligence Predicts Part Quality

AI software “learns” via DOE the influence of process variables on part quality and applies that “learning” to make good/bad part determination in production.

Read MorePlastics Processing Machine Learning and Artificial Intelligence Framework Launched

Oden Technologies has added what it calls the industry’s first cloud and edge Machine Learning (ML) and Artificial Intelligence (AI) framework for manufacturing to its industrial automation platform.

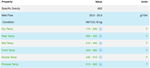

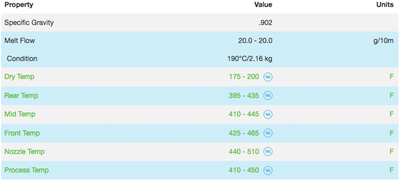

Read MoreMachine Learning Helps Software Fill Key Gaps in Plastics Data Sheets

MobileSpecs LLC has boosted the breadth and depth of its materials database for injection molders by applying a machine learning algorithm to predict missing information for key processing parameters, including data points like drying temperature.

Read More